What is Continuous Integration?

Continuous Integration is a software development practice where members of a team integrate their work frequently, usually each person integrates at least daily - leading to multiple integrations per day. Each integration is verified by an automated build (including test) to detect integration errors as quickly as possible. Many teams find that this approach leads to significantly reduced integration problems and allows a team to develop cohesive software more rapidly. (Martin Fowler)

What is Jenkins?

Jenkins is a highly popular open-source continuous integration server written in Java for testing and reporting on isolated changes in a larger code base in real time. Jenkins enables developers to find and fix defects in a code base rapidly and to automate the testing of their builds.

How can Jenkins help testers?

As a tester working in an Agile environment, you need a robust process in which you can run your automated tests efficiently (without manual intervention), publish test execution reports that are accessible to all stakeholders, and save those reports as artifacts so that they are available at any time for comparison with previous reports.

As a tester you can integrate your sanity, functional and load/performance tests into Jenkins, where they can easily be run on the CI, Test, Staging and Performance environments, scheduled to run at any time, and have their result reports saved for analysis and review. You can also decide whether a build is marked stable, unstable or failed.

By integrating automated tests into Jenkins we can save a lot of time, give developers quick feedback and spend more time writing tests to increase test coverage (which is the ultimate goal).

In Jenkins every task or operation is considered a job, and everything that is done through Jenkins can be thought of as having a few discrete components:

- Triggers - What causes a job to be run

- Location - Where do we run a job

- Steps - What actions are taken when the job runs

- Results - What is the outcome of the job

While creating jobs in Jenkins you need to pay close attention to each of the components above.

Let's look at an example of a JMeter test that is integrated in Jenkins and runs against a CI build.

Prerequisites:

- JDK should be installed.

- Jenkins should be installed and running to deploy the builds.

- Apache Ant.

- Apache JMeter.

1. Open Jenkins, select the view/project (optional) and click on the "New Job" link.

2. Enter the job name, select "Build a free-style software project" and click OK.

You can also create a new job by copying the settings from an existing job. To do so, select the "Copy existing Job" option, enter the name of the existing job in the "Copy from" text field and click OK.

3. The job configuration page now opens.

4. Give a brief description of the job you are going to create.

5. Select the JDK version you want to use for this job.

6. Select the source code management option and enter the link to where your source code resides.

7. Enter your local directory name (optional).

8. Under "Build Triggers" you can specify multiple criteria to trigger this job. If you want to run your sanity tests after a project is built, then select the "Build after other projects are built" option. In my case I want Jenkins to run this job every day at 7 am, so I am selecting "Build periodically" and specifying the time.

9. Select "Add timestamps to the Console Output" under Build Environment, so that you can see timestamps and trace errors easily.

10. Under the Build section click the "Add build step" drop-down and select "Invoke Ant". I will be using Ant to run the JMeter tests (check my last post, in which I explained how to run JMeter tests with Ant).

11. Select the Ant version you are going to use from the "Ant version" drop-down menu.

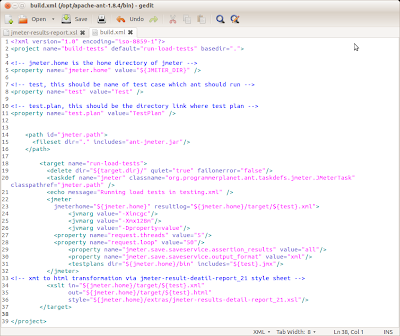
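As a rough illustration of the daily 7 am schedule from step 8: the "Build periodically" field takes a cron-style expression with five fields (minute, hour, day of month, month, day of week), so `0 7 * * *` means 07:00 every day. Jenkins also accepts an `H` token in a field to spread load across jobs, which this sketch ignores. The Python below is a deliberately simplified matcher, not Jenkins' own parser. The function names are made up for illustration, and only `*`, single integers and comma-separated lists are handled.

```python
from datetime import datetime


def field_matches(field: str, value: int) -> bool:
    """Match one cron field: '*' or a comma-separated list of integers."""
    if field == "*":
        return True
    return value in {int(part) for part in field.split(",")}


def cron_matches(expr: str, when: datetime) -> bool:
    """Return True if a simplified five-field cron expression fires at `when`."""
    minute, hour, day, month, weekday = expr.split()
    # cron counts Sunday as 0; Python's isoweekday() counts Sunday as 7.
    cron_weekday = when.isoweekday() % 7
    return (
        field_matches(minute, when.minute)
        and field_matches(hour, when.hour)
        and field_matches(day, when.day)
        and field_matches(month, when.month)
        and field_matches(weekday, cron_weekday)
    )


DAILY_7AM = "0 7 * * *"  # minute 0, hour 7, every day of every month

print(cron_matches(DAILY_7AM, datetime(2024, 1, 15, 7, 0)))   # True
print(cron_matches(DAILY_7AM, datetime(2024, 1, 15, 7, 30)))  # False
```

Ranges (`1-5`), steps (`*/15`) and the `H` token are left out on purpose to keep the example short.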

12. Give the absolute path of your build.xml file in the "Build file" text field.

13. Specify your Ant properties based on build.xml. (This will be your command-line Ant script.)

14. Now select a "Post-build action" for your job.

15. I want to publish a report, so I am selecting "Publish performance test result report". (This comes from an additional Jenkins plugin for JMeter, so you need to install it separately.)

But you can customize this job as much as you like. You can set up email notifications so that you receive an email if the build fails or becomes unstable. You can also customize the notification message, and much more.
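Going back to steps 12 and 13 for a moment: the build file and the Ant properties together amount to roughly the command line you would type by hand, with `-f` naming the build file and each property passed as `-Dname=value`. The sketch below only assembles such a command so you can compare it with what the job is configured to run. The path, the property names and the `ant_command` helper are hypothetical placeholders, not values from this job.

```python
import shlex
from typing import Dict, List, Optional


def ant_command(build_file: str,
                properties: Dict[str, str],
                target: Optional[str] = None) -> List[str]:
    """Assemble an Ant command line from a build file and properties."""
    cmd = ["ant", "-f", build_file]
    for name, value in properties.items():
        # Each Ant property is passed on the command line as -Dname=value.
        cmd.append(f"-D{name}={value}")
    if target:
        cmd.append(target)
    return cmd


# Hypothetical example values; replace them with the ones from your build.xml.
cmd = ant_command(
    "/path/to/build.xml",
    {"jmeter.home": "/opt/apache-jmeter", "test": "SanityTest"},
)
print(shlex.join(cmd))
```

Running the printed command in a terminal before configuring the job is a quick way to check that build.xml and its properties work outside Jenkins.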

16. Select the type of report you need to publish (JMeter).

17. Give the absolute path of your report files.

18. Specify the error percentage thresholds that mark the build as unstable or failed.
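To make the threshold idea from step 18 concrete, here is a minimal sketch of how an error percentage can be derived from a JMeter results file and compared against two limits. It assumes the results were saved as CSV with a header row that includes a `success` column. The sample rows, the threshold values (10 and 50) and the function names are invented for illustration. The plugin's own comparison rules may differ, for example in whether a limit is inclusive.

```python
import csv
import io

# Trimmed, made-up sample of a JMeter CSV results file (real files have more columns).
SAMPLE_JTL = """timeStamp,elapsed,label,responseCode,success
1700000000000,120,Login,200,true
1700000000500,340,Search,200,true
1700000001000,95,Search,500,false
1700000001500,210,Logout,200,true
"""


def error_percentage(jtl_csv) -> float:
    """Percentage of samples whose 'success' column is not 'true'."""
    total = failed = 0
    for row in csv.DictReader(jtl_csv):
        total += 1
        if row["success"].strip().lower() != "true":
            failed += 1
    return 100.0 * failed / total if total else 0.0


def build_status(error_pct: float, unstable_at: float, failed_at: float) -> str:
    """Classify a build; treating the limits as inclusive is an assumption."""
    if error_pct >= failed_at:
        return "FAILED"
    if error_pct >= unstable_at:
        return "UNSTABLE"
    return "STABLE"


pct = error_percentage(io.StringIO(SAMPLE_JTL))
print(pct, build_status(pct, unstable_at=10, failed_at=50))
```

With one failed sample out of four, the sample data gives an error rate of 25 percent, which falls between the two hypothetical limits and so is classed as unstable.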

21. This build is scheduled to run every day at 7 am, so if you want to run it manually the first time (to check that the job was created properly), click "Build Now".

22. Once you click the "Build Now" link, the job starts and a progress bar appears.

23. To see what is actually running, check the console: click on the progress bar and then on "Console Output". This shows your build.xml steps and the project checkout steps in detail, with timestamps.

24. Once the job has completed without errors, you will see "BUILD SUCCESSFUL" in the console output log. If the build is stable and has not failed, a green sign appears.

25. To see the report of your test, click on the "Performance report" link, which opens the detailed report.

26. The report will look like this.

We can create multiple jobs like this (Sanity, Functional, Regression, Performance and GUI), which can easily be run on any of the specified environments (CI, Test, Performance).

I hope this detailed walkthrough helps you create jobs in Jenkins.